
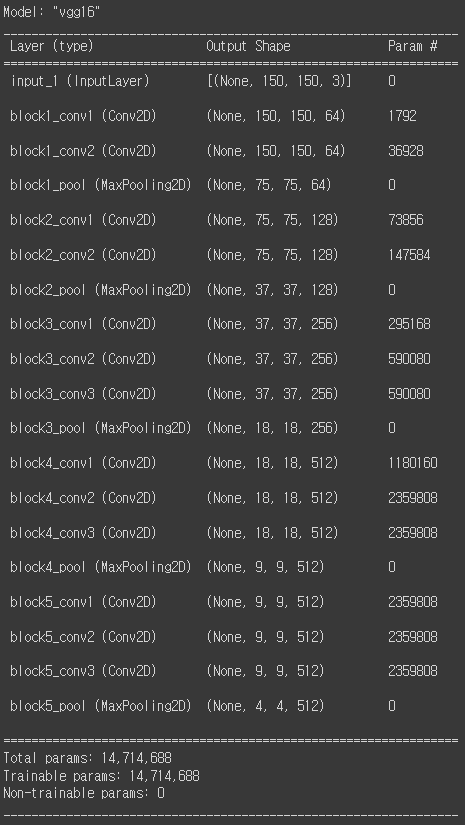

VGG-16 Model

- University of Oxford - Visual Geometry Group

- 2nd place in ILSVRC 2014 (classification)

- ImageNet Large Scale Visual Recognition Challenge (ILSVRC)

- Uses a pre-trained model for feature extraction

- Feature Extraction: reuse the pre-trained parameters

- Classification: train new parameters

1. Image File Directory Setting

train_dir = 'train'
valid_dir = 'validation'
test_dir = 'test'

2. Import VGG-16 Model

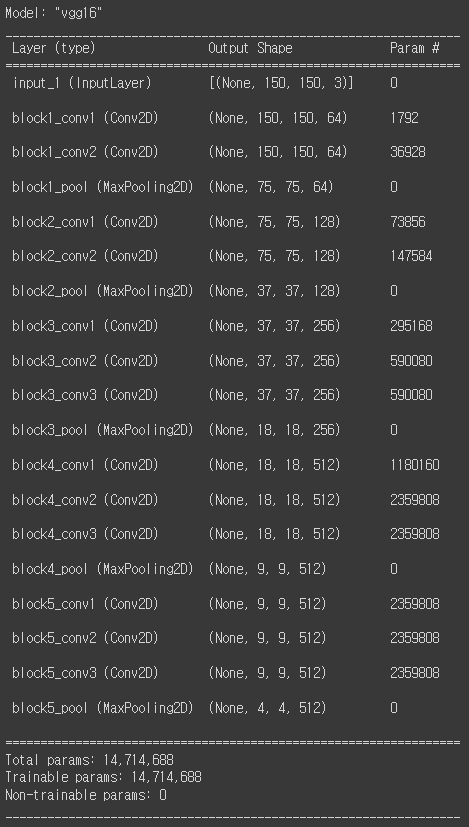

2-1. conv_base

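from tensorflow.keras.applications import VGG16

# Load VGG16's convolutional base with ImageNet weights;
# include_top=False drops the fully connected classifier on top
conv_base = VGG16(weights = 'imagenet',
                  include_top = False,
                  input_shape = (150, 150, 3))

2-2. Model Information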

conv_base.summary()
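Why does the base output (4, 4, 512)? VGG16's 3x3 convolutions use 'same' padding, so they keep the spatial size. Each of its five 2x2, stride-2 max-pooling layers halves the size and rounds down. The last block has 512 filters. The short sketch below traces the shapes. The helper name is hypothetical.

```python
def vgg16_feature_map_size(height, width):
    """Spatial size of VGG16's final pooling output (include_top=False).

    Convolutions keep height/width ('same' padding); each of the 5
    max-pooling layers (2x2, stride 2, 'valid') halves it, rounding down.
    """
    for _ in range(5):
        height //= 2
        width //= 2
    return height, width

h, w = vgg16_feature_map_size(150, 150)
print((h, w, 512))  # 150 -> 75 -> 37 -> 18 -> 9 -> 4, giving (4, 4, 512)
```

The same rule gives (7, 7, 512) for VGG16's native 224x224 input.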

3. Feature Extraction

3-1. Define the feature extraction function: extract_features()

from tensorflow.keras.preprocessing.image import ImageDataGenerator
import numpy as np

# Scale pixel values from [0, 255] to [0, 1]
datagen = ImageDataGenerator(rescale = 1./255)
batch_size = 20

def extract_features(directory, sample_count):
    # For 150x150 inputs, conv_base outputs a (4, 4, 512) feature map per image
    features = np.zeros(shape = (sample_count, 4, 4, 512))
    labels = np.zeros(shape = (sample_count))
    generator = datagen.flow_from_directory(directory,
                                            target_size = (150, 150),
                                            batch_size = batch_size,
                                            class_mode = 'binary')
    i = 0
    # The generator loops forever, so stop once sample_count images are done
    for inputs_batch, labels_batch in generator:
        features_batch = conv_base.predict(inputs_batch)
        features[i * batch_size : (i + 1) * batch_size] = features_batch
        labels[i * batch_size : (i + 1) * batch_size] = labels_batch
        i += 1
        if i * batch_size >= sample_count:
            break
    return features, labels

3-2. Applying the feature extraction function

%%time
# Takes about 1 minute
train_features, train_labels = extract_features(train_dir, 2000)
valid_features, valid_labels = extract_features(valid_dir, 1000)
test_features, test_labels = extract_features(test_dir, 1000)

train_features.shape, valid_features.shape, test_features.shape

((2000, 4, 4, 512), (1000, 4, 4, 512), (1000, 4, 4, 512))
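The `extract_features()` loop works here because 2000 and 1000 are multiples of the batch size of 20. With 50 samples, for example, the last slice `features[40:60]` has only 10 rows but receives a 20-row batch, and NumPy raises a broadcasting error.

Below is a minimal sketch of a more defensive variant. `fill_features()` is a hypothetical helper that takes any iterator of `(inputs, labels)` batches and a `predict` callable standing in for `conv_base.predict`. `fake_batches` and `fake_predict` are hypothetical stand-ins so the sketch runs without TensorFlow.

```python
import numpy as np

def fill_features(batches, sample_count, predict, feature_shape=(4, 4, 512)):
    """Fill preallocated feature/label arrays from an iterator of batches.

    batches: yields (inputs, labels) pairs forever, like a Keras
             directory iterator (hypothetical stand-in below).
    predict: callable standing in for conv_base.predict.
    The final batch is truncated so it never overruns sample_count.
    """
    features = np.zeros((sample_count, *feature_shape))
    labels = np.zeros(sample_count)
    filled = 0
    for inputs_batch, labels_batch in batches:
        n = min(len(inputs_batch), sample_count - filled)
        features[filled:filled + n] = predict(inputs_batch[:n])
        labels[filled:filled + n] = labels_batch[:n]
        filled += n
        if filled >= sample_count:
            break
    return features, labels

def fake_batches(batch_size=20):
    # Hypothetical generator: random 150x150 RGB "images" with 0/1 labels
    rng = np.random.default_rng(0)
    while True:
        yield (rng.random((batch_size, 150, 150, 3)),
               rng.integers(0, 2, batch_size).astype(float))

def fake_predict(inputs):
    # Hypothetical model: returns a (4, 4, 512) block of ones per input
    return np.ones((len(inputs), 4, 4, 512))

feats, labs = fill_features(fake_batches(), 50, fake_predict)
print(feats.shape, labs.shape)  # (50, 4, 4, 512) (50,)
```

In the notebook, `fill_features(generator, 2000, conv_base.predict)` would play the role of the original loop.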

3-3. Reshape Features

train_features = np.reshape(train_features, (2000, 4 * 4 * 512))
valid_features = np.reshape(valid_features, (1000, 4 * 4 * 512))
test_features = np.reshape(test_features, (1000, 4 * 4 * 512))

train_features.shape, valid_features.shape, test_features.shape

((2000, 8192), (1000, 8192), (1000, 8192))
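`np.reshape` keeps each sample's values in row-major (C) order. Row i of the flattened array is therefore sample i's 4 x 4 x 512 feature map laid out as 8192 numbers.

The small NumPy check below uses a stand-in array with 3 samples instead of 2000. It also shows that passing -1 lets NumPy infer the 8192 instead of hard-coding it.

```python
import numpy as np

# Small stand-in for train_features: 3 samples of shape (4, 4, 512)
features = np.arange(3 * 4 * 4 * 512, dtype=np.float32).reshape(3, 4, 4, 512)

flat_explicit = np.reshape(features, (3, 4 * 4 * 512))
# -1 tells NumPy to infer the flattened length (4 * 4 * 512 = 8192)
flat_inferred = features.reshape(len(features), -1)

print(flat_explicit.shape)                            # (3, 8192)
print(np.array_equal(flat_explicit, flat_inferred))   # True
# Row 1 is sample 1's feature map read in row-major order
print(np.array_equal(flat_inferred[1], features[1].ravel()))  # True
```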

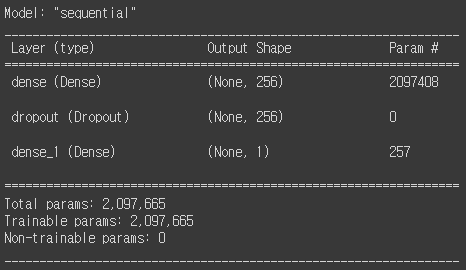

4. Keras Modeling with VGG-16 Extracted Features

4-1. Model Define

from tensorflow.keras import models, layers

model = models.Sequential()
model.add(layers.Dense(256, activation = 'relu', input_dim = 4 * 4 * 512))
model.add(layers.Dropout(0.5))
model.add(layers.Dense(1, activation = 'sigmoid'))

model.summary()
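You can check the classifier's parameter count by hand. A Dense layer has one weight per input-unit pair plus one bias per unit, and Dropout has no parameters. The quick check below should agree with the "Total params" line of `model.summary()`. The `dense_params` name is hypothetical.

```python
def dense_params(n_inputs, n_units):
    # One weight per (input, unit) pair plus one bias per unit
    return n_inputs * n_units + n_units

hidden = dense_params(4 * 4 * 512, 256)  # 8192 * 256 + 256
output = dense_params(256, 1)            # 256 * 1 + 1
print(hidden, output, hidden + output)   # 2097408 257 2097665
```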

4-2. Model Compile

model.compile(loss = 'binary_crossentropy',
              optimizer = 'adam',
              metrics = ['accuracy'])

4-3. Model Fit

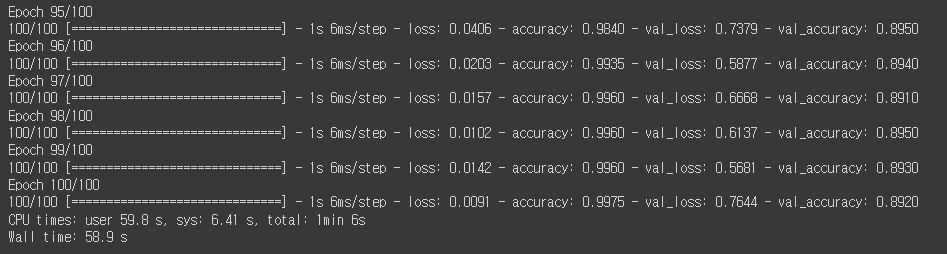

%%time

Hist_dandc = model.fit(train_features, train_labels,

epochs = 100,

batch_size = 20,

validation_data = (valid_features, valid_labels))

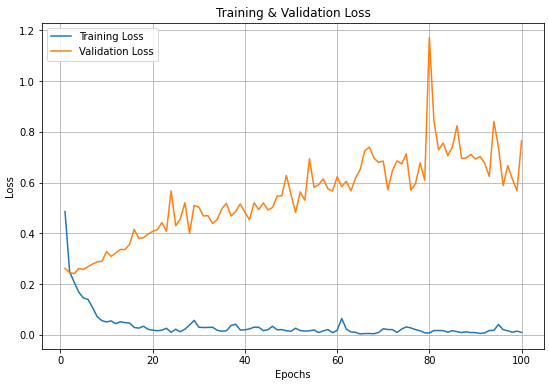

4-4. Visualizing Training Results

#Loss Visualization

import matplotlib.pyplot as plt

epochs = range(1, len(Hist_dandc.history['loss']) + 1)

plt.figure(figsize = (9, 6))

plt.plot(epochs, Hist_dandc.history['loss'])

plt.plot(epochs, Hist_dandc.history['val_loss'])

plt.title('Training & Validation Loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend(['Training Loss', 'Validation Loss'])

plt.grid()

plt.show()

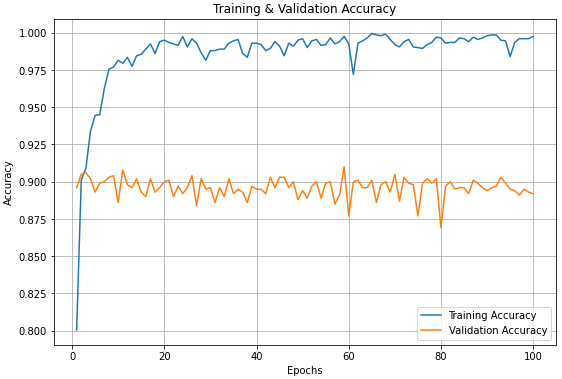

#Accuracy Visualization

import matplotlib.pyplot as plt

epochs = range(1, len(Hist_dandc.history['loss']) + 1)

plt.figure(figsize = (9, 6))

plt.plot(epochs, Hist_dandc.history['accuracy'])

plt.plot(epochs, Hist_dandc.history['val_accuracy'])

plt.title('Training & Validation Accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend(['Training Accuracy', 'Validation Accuracy'])

plt.grid()

plt.show()

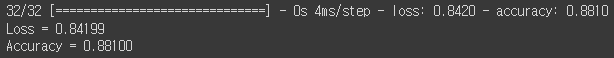

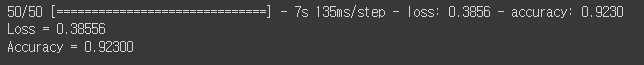
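Reading the curves by eye works, but over 100 epochs it can help to find the epoch with the lowest validation loss programmatically. If validation loss bottoms out early and then rises while training loss keeps falling, that is the usual sign of overfitting.

A minimal sketch, assuming a hypothetical `best_epoch()` helper that takes a `History.history` dict such as `Hist_dandc.history`:

```python
def best_epoch(history, monitor='val_loss', mode='min'):
    """Return (1-based epoch, value) of the best entry in history[monitor].

    history: a dict of per-epoch lists, e.g. Hist_dandc.history.
    mode='min' suits losses; mode='max' suits accuracies.
    """
    values = history[monitor]
    pick = min if mode == 'min' else max
    best_index = pick(range(len(values)), key=lambda i: values[i])
    return best_index + 1, values[best_index]

# Usage in the notebook (after model.fit):
# epoch, val_loss = best_epoch(Hist_dandc.history)
# epoch, val_acc = best_epoch(Hist_dandc.history, 'val_accuracy', 'max')
```

Keras also provides `tf.keras.callbacks.EarlyStopping(monitor='val_loss', restore_best_weights=True)`, which stops training once the monitored value stops improving. The sketch above only inspects the history after training has finished.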

4-5. Model Evaluate

loss, accuracy = model.evaluate(test_features, test_labels)

print('Loss = {:.5f}'.format(loss))

print('Accuracy = {:.5f}'.format(accuracy))
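The model was compiled with `loss = 'binary_crossentropy'`, so the `Loss` printed by `model.evaluate()` is the mean binary cross-entropy over the test features. For a label y in {0, 1} and a sigmoid output p, each sample contributes -(y·log(p) + (1 - y)·log(1 - p)).

Below is a NumPy sketch of that formula with a hypothetical helper name. Clipping p to [eps, 1 - eps] avoids log(0) and is an assumption here. Keras applies a similar small-epsilon clip internally, so values should match closely but not necessarily bit for bit.

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy, clipping predictions away from 0 and 1."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1 - eps)
    return -np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))

# Two samples: a confident correct "1" and a fairly confident correct "0"
print(binary_crossentropy([1, 0], [0.9, 0.2]))
```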

Fine Tuning

1. Data Preprocessing

1-1. ImageDataGenerator( ) & flow_from_directory( )

from tensorflow.keras.preprocessing.image import ImageDataGenerator

train_datagen = ImageDataGenerator(rescale = 1./255)

valid_datagen = ImageDataGenerator(rescale = 1./255)

train_generator = train_datagen.flow_from_directory(

train_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary')

valid_generator = valid_datagen.flow_from_directory(

valid_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary')

2. Import VGG-16 Model & Freeze Some Layers

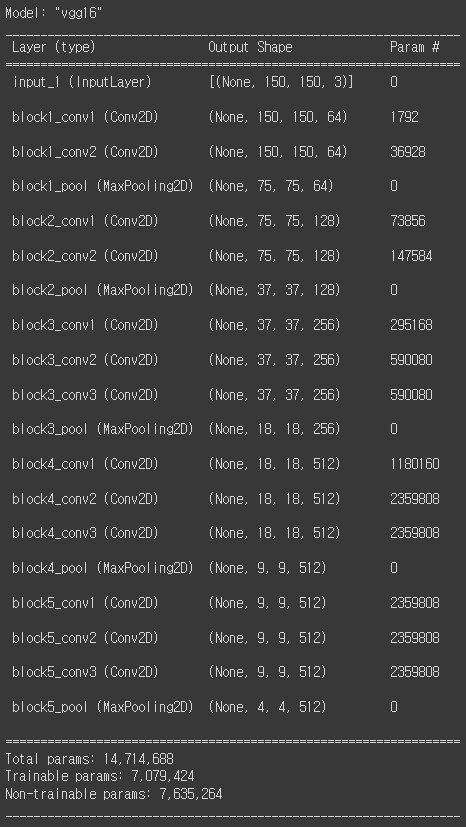

2-1. conv_base

from tensorflow.keras.applications import VGG16

conv_base = VGG16(weights = 'imagenet',

include_top = False,

input_shape = (150, 150, 3))

2-2. Model Information

conv_base.summary()

2-3. Freezing All Layers Before 'block5_conv1'

print('Trainable weight tensors in conv_base before freezing:', len(conv_base.trainable_weights))

Trainable weight tensors in conv_base before freezing: 26

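# Freeze every layer before 'block5_conv1';
# block5_conv1 and all layers after it stay trainable
set_trainable = False
for layer in conv_base.layers:
    if layer.name == 'block5_conv1':
        set_trainable = True
    if set_trainable:
        layer.trainable = True
    else:
        layer.trainable = False

print('Trainable weight tensors in conv_base after freezing:', len(conv_base.trainable_weights))

Trainable weight tensors in conv_base after freezing: 6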
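The counts 26 and 6 follow from VGG16's layout:

- Each of its 13 convolution layers holds two weight tensors (kernel and bias).
- Pooling layers hold none.
- Block 5 contains three convolution layers.

The pure-Python sketch below replays the freezing loop over VGG16's layer names. The names are listed here from the Keras VGG16 architecture, with the input layer omitted. `trainable_flags` is a hypothetical helper.

```python
# VGG16 layer names with include_top=False (input layer omitted)
VGG16_LAYERS = (
    ['block1_conv1', 'block1_conv2', 'block1_pool']
    + ['block2_conv1', 'block2_conv2', 'block2_pool']
    + ['block3_conv1', 'block3_conv2', 'block3_conv3', 'block3_pool']
    + ['block4_conv1', 'block4_conv2', 'block4_conv3', 'block4_pool']
    + ['block5_conv1', 'block5_conv2', 'block5_conv3', 'block5_pool']
)

def trainable_flags(layer_names, first_trainable):
    """Mimic the loop above: False until first_trainable, True from then on."""
    flags, set_trainable = {}, False
    for name in layer_names:
        if name == first_trainable:
            set_trainable = True
        flags[name] = set_trainable
    return flags

flags = trainable_flags(VGG16_LAYERS, 'block5_conv1')
# Each conv layer contributes 2 weight tensors (kernel, bias); pools have none
n_conv = sum(1 for name in VGG16_LAYERS if 'conv' in name)
n_trainable_conv = sum(1 for name in VGG16_LAYERS if 'conv' in name and flags[name])
print(n_conv * 2, n_trainable_conv * 2)  # 26 6
```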

conv_base.summary()

3. Keras CNN Modeling with Partially Frozen VGG-16 Layers

3-1. Model Define

from tensorflow.keras import models, layers

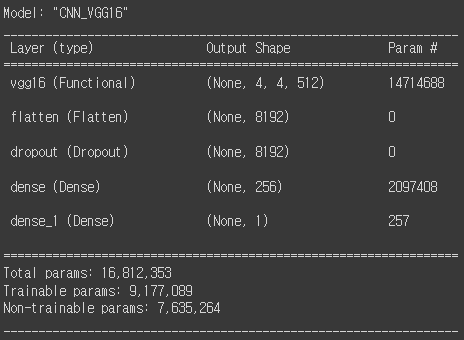

model = models.Sequential(name = 'CNN_VGG16')

model.add(conv_base)

model.add(layers.Flatten())

model.add(layers.Dropout(0.4))

model.add(layers.Dense(256, activation = 'relu'))

model.add(layers.Dense(1, activation = 'sigmoid'))

model.summary()
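`model.summary()` splits the parameters into trainable and non-trainable ones. Assuming 3x3 kernels in every VGG16 convolution and the 256-unit head defined above, you can check these counts by hand:

- A conv layer has k·k·c_in·c_out + c_out parameters.
- A Dense layer has n_in·n_out + n_out parameters.
- Flatten and Dropout have none.

`conv_params`, `dense_params`, and `blocks` are hypothetical names for this sketch. Compare the printed values with the summary's "Trainable params" and "Non-trainable params" lines.

```python
def conv_params(k, c_in, c_out):
    # k*k*c_in weights per filter, plus one bias per filter
    return k * k * c_in * c_out + c_out

def dense_params(n_in, n_out):
    # One weight per (input, unit) pair plus one bias per unit
    return n_in * n_out + n_out

# (in_channels, out_channels) of VGG16's 13 conv layers, grouped by block
blocks = [
    [(3, 64), (64, 64)],
    [(64, 128), (128, 128)],
    [(128, 256), (256, 256), (256, 256)],
    [(256, 512), (512, 512), (512, 512)],
    [(512, 512), (512, 512), (512, 512)],
]
conv_total = sum(conv_params(3, i, o) for block in blocks for (i, o) in block)
block5 = sum(conv_params(3, i, o) for (i, o) in blocks[4])
head = dense_params(4 * 4 * 512, 256) + dense_params(256, 1)

print(conv_total)           # 14714688: whole conv base
print(block5 + head)        # 9177089: trainable (block 5 + classifier head)
print(conv_total - block5)  # 7635264: non-trainable (blocks 1-4)
print(conv_total + head)    # 16812353: total
```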

3-2. Model Compile

- Configure how the model is trained

- Fine-tune the already-trained weights

- Use a very small learning rate

- optimizers.Adam(learning_rate = 0.000005)
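A tiny learning rate keeps fine-tuning gentle. In Adam, the bias-corrected moments make the very first update to each weight roughly lr·sign(g). Its magnitude is therefore close to the learning rate whatever the gradient's scale, unless the gradient is comparable to epsilon.

The NumPy sketch below implements the textbook Adam update. It uses beta1=0.9 and beta2=0.999, plus eps=1e-7, which matches Keras's default epsilon. It compares Keras's default learning rate of 0.001 with the 0.000005 used here. `adam_step` and the gradient values are hypothetical. Keras's own implementation folds the bias correction into the step size, so exact numbers can differ slightly.

```python
import numpy as np

def adam_step(w, g, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-7):
    """One textbook Adam update at step t (1-based); returns (w, m, v)."""
    m = beta1 * m + (1 - beta1) * g        # first-moment estimate
    v = beta2 * v + (1 - beta2) * g ** 2   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)           # bias corrections
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w, m, v

w0 = np.array([0.3, -1.2, 0.05])   # hypothetical pre-trained weights
g = np.array([0.5, -2.0, 0.01])    # hypothetical gradient, very different scales
for lr in (1e-3, 5e-6):
    w1, _, _ = adam_step(w0, g, np.zeros_like(w0), np.zeros_like(w0), t=1, lr=lr)
    # Every weight moves by about lr, regardless of its gradient's size
    print(lr, np.abs(w1 - w0))
```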

from tensorflow.keras import optimizers

# learning_rate replaces Adam's deprecated lr argument
model.compile(loss = 'binary_crossentropy',
              optimizer = optimizers.Adam(learning_rate = 0.000005),
              metrics = ['accuracy'])

3-3. Model Fit

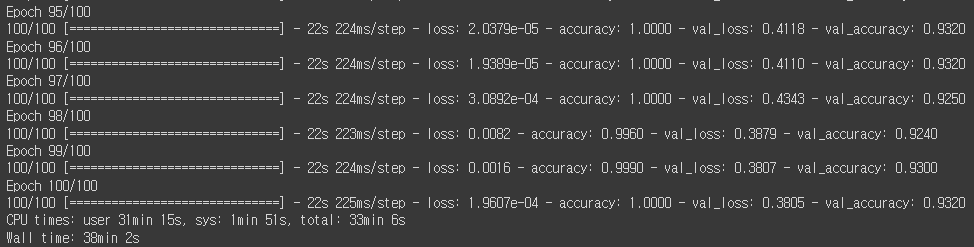

%%time

Hist_dandc = model.fit(train_generator,

steps_per_epoch = 100,

epochs = 100,

validation_data = valid_generator,

validation_steps = 50)

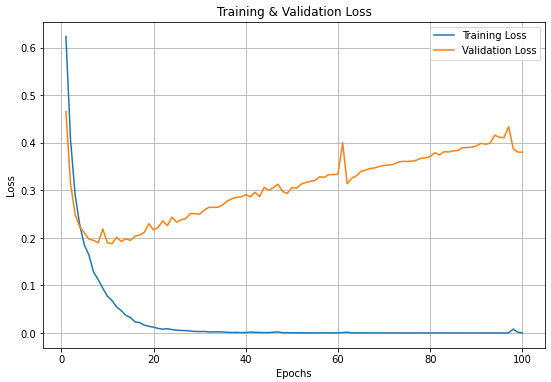
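With generators, `steps_per_epoch` and `validation_steps` count batches, not images. The feature-extraction part used 2000 training images and 1000 validation images. Assuming the same counts here, batches of 20 give exactly the values above, so each epoch draws as many images as each set holds. The small helper below makes the arithmetic explicit. `steps_for` is a hypothetical name.

```python
import math

def steps_for(n_samples, batch_size):
    # Batches needed to cover n_samples once; the last batch may be smaller
    return math.ceil(n_samples / batch_size)

print(steps_for(2000, 20))  # 100, the steps_per_epoch used above
print(steps_for(1000, 20))  # 50, the validation_steps used above
```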

3-4. Visualizing Training Results

#Loss Visualization

import matplotlib.pyplot as plt

epochs = range(1, len(Hist_dandc.history['loss']) + 1)

plt.figure(figsize = (9, 6))

plt.plot(epochs, Hist_dandc.history['loss'])

plt.plot(epochs, Hist_dandc.history['val_loss'])

plt.title('Training & Validation Loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend(['Training Loss', 'Validation Loss'])

plt.grid()

plt.show()

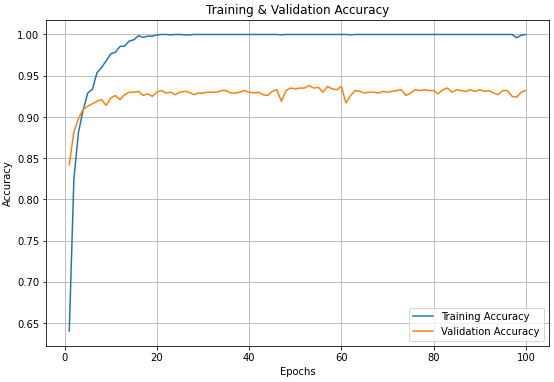

#Accuracy Visualization

import matplotlib.pyplot as plt

epochs = range(1, len(Hist_dandc.history['loss']) + 1)

plt.figure(figsize = (9, 6))

plt.plot(epochs, Hist_dandc.history['accuracy'])

plt.plot(epochs, Hist_dandc.history['val_accuracy'])

plt.title('Training & Validation Accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend(['Training Accuracy', 'Validation Accuracy'])

plt.grid()

plt.show()

3-5. Model Evaluate

test_datagen = ImageDataGenerator(rescale = 1./255)

test_generator = test_datagen.flow_from_directory(

test_dir,

target_size = (150, 150),

batch_size = 20,

class_mode = 'binary')

Found 1000 images belonging to 2 classes.
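`flow_from_directory()` treats each subdirectory of `test_dir` as one class. When `classes` is not given, it assigns label indices in alphanumeric order of the subdirectory names. `test_generator.class_indices` shows the actual mapping.

The pure-Python sketch below reproduces that rule. It uses a hypothetical `infer_class_indices()` helper and hypothetical `cats`/`dogs` folders, since the post does not name its class folders.

```python
import os
import tempfile

def infer_class_indices(directory):
    """Map class subdirectories to label indices in sorted (alphanumeric) order,
    mirroring flow_from_directory's behavior when `classes` is None."""
    names = sorted(entry.name for entry in os.scandir(directory) if entry.is_dir())
    return {name: index for index, name in enumerate(names)}

# Hypothetical layout: <root>/cats/ and <root>/dogs/ (image files omitted)
with tempfile.TemporaryDirectory() as root:
    for name in ('dogs', 'cats'):
        os.makedirs(os.path.join(root, name))
    print(infer_class_indices(root))  # {'cats': 0, 'dogs': 1}
```

With class_mode = 'binary', these indices become the 0/1 labels that the sigmoid output is trained against.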

loss, accuracy = model.evaluate(test_generator,

steps = 50)

print('Loss = {:.5f}'.format(loss))

print('Accuracy = {:.5f}'.format(accuracy))
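The model ends in a single sigmoid unit and was compiled with `binary_crossentropy`, so Keras resolves `metrics = ['accuracy']` to binary accuracy. A prediction counts as class 1 when the sigmoid output exceeds 0.5.

Below is a NumPy sketch of that computation. It uses a hypothetical `binary_accuracy()` helper and made-up example values.

```python
import numpy as np

def binary_accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose thresholded sigmoid output matches the label."""
    y_pred = (np.asarray(y_prob) > threshold).astype(float)
    return float(np.mean(y_pred == np.asarray(y_true, dtype=float)))

# 0.92 -> 1 (correct), 0.30 -> 0 (correct), 0.45 -> 0 (wrong), 0.51 -> 1 (wrong)
print(binary_accuracy([1, 0, 1, 0], [0.92, 0.30, 0.45, 0.51]))  # 0.5
```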
